From Criteria to Confidence: Measuring Soft Skills That Matter

Why Clarity Beats Guesswork in Soft Skills Evaluation

Defining What “Good” Looks Like

Translating Values into Observable Behaviors

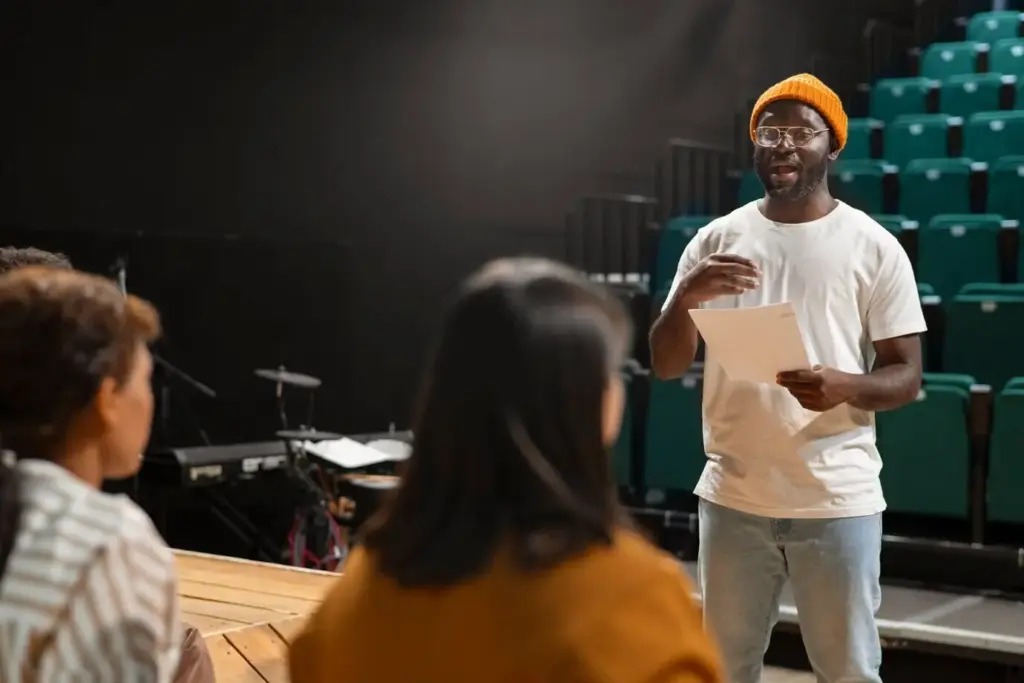

A Brief Story: Two Mentors, One Rubric

Shaping Outcomes That Align With Growth

Designing Rubrics That Teach While They Measure


Criteria and Performance Levels That Are Crystal Clear

Descriptors With Verbs, Conditions, and Quality

Framework Alignment Without Losing Context

Draw inspiration from established references, such as professional standards, the AAC&U VALUE rubrics, or sector-specific competency libraries, then adapt them to local practices, community values, and learner needs. Preserve a framework's terminology where it helps, but put clarity for your stakeholders first. If a framework mentions collaboration, define what collaboration means in your projects: shared documentation, equitable task ownership, and healthy disagreement. Alignment brings credibility; context brings usability. Invite employer partners to review your alignment map for relevance, and ask students to stress-test the descriptors in their capstones. Regular iteration keeps the map current, inclusive, and trustworthy.

Backwards Design and Curriculum Coherence

Begin with culminating performances, such as presentations to external audiences, cross-functional teamwork, or ethical decision briefings, and work backward to the prerequisites. Decide which course introduces each skill, which develops it, and which assesses it at the expected level of mastery. Prevent overload by distributing that responsibility across the pathway rather than concentrating it in one course. Use signature assignments to anchor practice, and link the resulting evidence in portfolios. When coherence replaces chance, learners experience reinforcement rather than repetition, faculty gain clarity, and assessment data tells a consistent story. Hold cross-course calibration meetings where instructors bring student work samples, refine indicators, and align expectations for shared outcomes.
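A curriculum map like this can be checked mechanically. The Python sketch that follows is one hypothetical way to do it, not a prescribed tool. It assumes that each course in the pathway tags a skill as introduced ("I"), developed ("D"), or assessed ("A"); that levels should never step backward along the pathway; and that a course assessing more than an arbitrary threshold of skills (here two) signals overload. The function name `check_pathway`, the course codes, and the skills are illustrative placeholders.

```python
from typing import Dict, List

# Hypothetical level codes: "I" = introduce, "D" = develop, "A" = assess.
# The rank encodes the assumption that levels should not step backward.
LEVEL_RANK = {"I": 0, "D": 1, "A": 2}


def check_pathway(
    pathway: List[str],
    curriculum_map: Dict[str, Dict[str, str]],
    max_assessed_per_course: int = 2,
) -> List[str]:
    """Return warnings about missing levels, out-of-order levels, and overload."""
    warnings: List[str] = []
    skills = sorted({skill for levels in curriculum_map.values() for skill in levels})

    for skill in skills:
        # Levels this skill receives, in the order the courses appear in the pathway.
        sequence = [
            curriculum_map[course][skill]
            for course in pathway
            if skill in curriculum_map.get(course, {})
        ]
        missing = [level for level in ("I", "D", "A") if level not in sequence]
        if missing:
            warnings.append(f"{skill}: no course at level(s) {', '.join(missing)}")
        ranks = [LEVEL_RANK[level] for level in sequence]
        if ranks != sorted(ranks):
            warnings.append(f"{skill}: levels out of order {sequence}")

    # Overload check: count how many skills each course assesses at level "A".
    for course in pathway:
        assessed = [s for s, level in curriculum_map.get(course, {}).items() if level == "A"]
        if len(assessed) > max_assessed_per_course:
            warnings.append(f"{course}: assesses {len(assessed)} skills (overload)")

    return warnings


# Illustrative, made-up pathway and map.
pathway = ["COMM 101", "BUS 210", "CAPSTONE 490"]
curriculum_map = {
    "COMM 101": {"communication": "I", "collaboration": "I"},
    "BUS 210": {"communication": "D", "collaboration": "D", "ethics": "I"},
    "CAPSTONE 490": {"communication": "A", "collaboration": "A", "ethics": "A"},
}

for warning in check_pathway(pathway, curriculum_map):
    print(warning)
# Expected output:
# ethics: no course at level(s) D
# CAPSTONE 490: assesses 3 skills (overload)
```

In this made-up map, ethics skips the develop stage and the capstone carries three assessments: the kinds of gaps a calibration meeting could resolve by shifting responsibility to an earlier course. The threshold and the "never step backward" rule are simplifying assumptions; a real program might allow a skill to be reassessed and then developed further.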

Micro-Credentials and Evidence-Rich Badges

Translate milestones into micro-credentials that require authentic artifacts: videos, annotated slide decks, reflective memos, and peer feedback summaries. Each badge should state its criteria, the context in which it was earned, and how it is verified. Learners curate evidence in portfolios, linking each artifact to the descriptors it demonstrates so the connection is transparent. Employers value concrete proof of capability over claims. Build renewal cycles that require continued practice under new conditions so badges stay meaningful. Publish exemplars to demystify expectations and reduce inequities. Ask alumni which artifacts actually influenced hiring decisions, then refine badge requirements to match real-world evaluation.

Collecting Evidence That Tells a Real Story

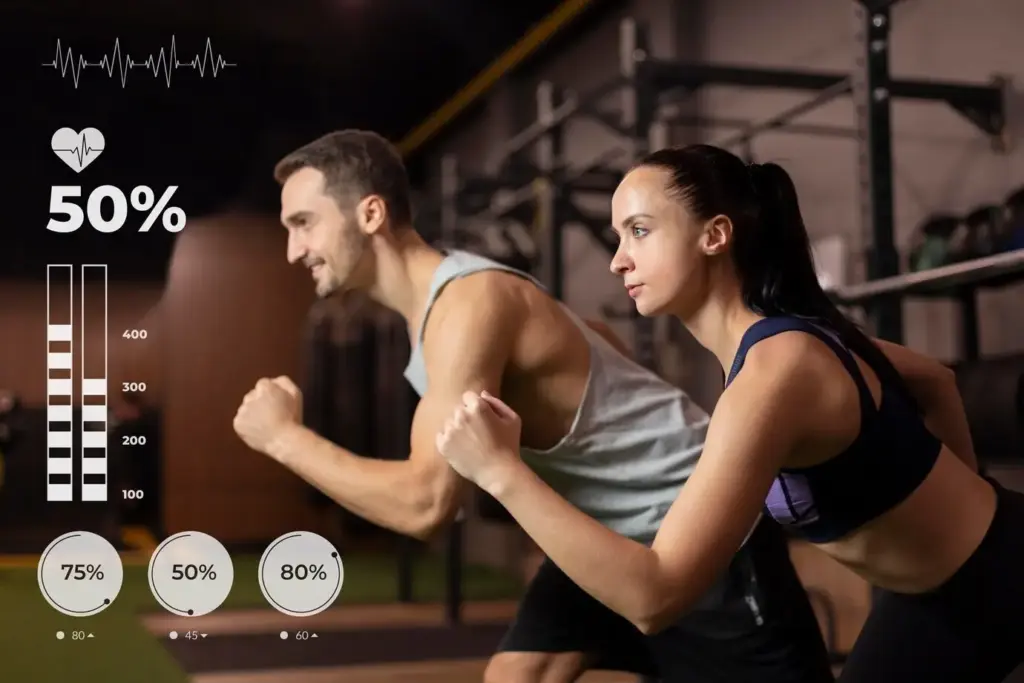

Observations and Simulations With Structure

Portfolios and Reflective Journals

360-Degree Feedback and Peer Review

Calibration, Fairness, and Continuous Improvement

Inter-Rater Reliability Made Practical

Bias Awareness and Inclusive Assessment